Semantically Organising Keyword Research Data with AI

I'm in the early stages of experimenting with local LLMs (Large Language Models) to perform useful SEO tasks.

Working with AI as a copilot is a lot like having a talented intern: someone who is there and ready to do more or less anything you can prompt them to do. The only caveat is that you're training your intern constantly. It's like having a co-worker with no memory of anything that came before.

This isn't entirely the case, of course: you can train a local LLM with data of your choosing. Deploying an LLM locally also solves the biggest problem with hosted chat-based LLMs, because you're not divulging client data to a third party. This is a good thing.

Here's a quick demo of what's possible. I'm assuming that you have exorcised the urge to just spaff AI-generated junk content all over the Internet and that you're interested in using AI to perform useful, productivity-improving tasks.

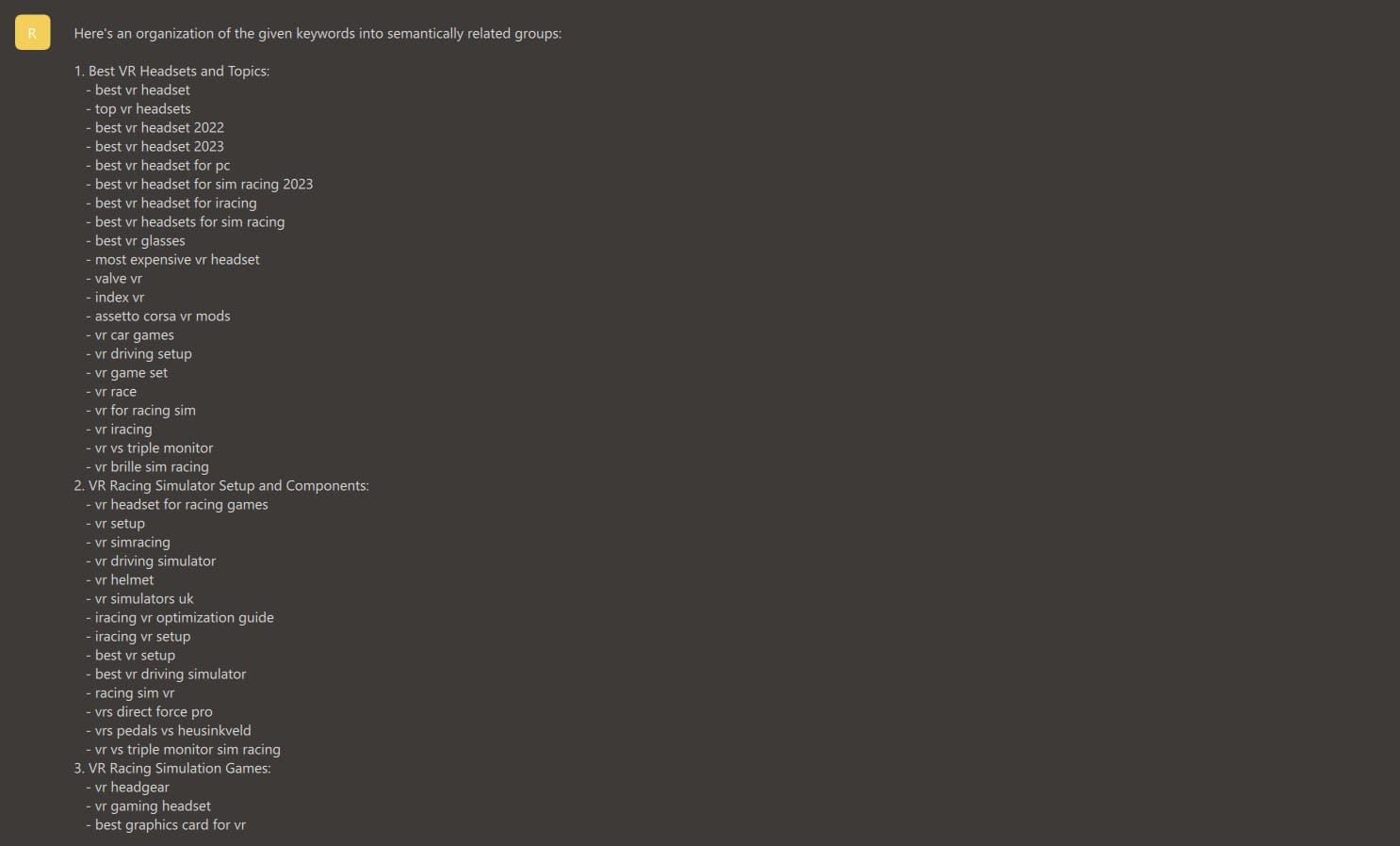

I'm using the Nous Hermes 2 Mistral DPO model for this example. Deploying it to a local machine is something we'll look at in another article. For now, just be aware that it's a chat-based model, fine-tuned by Nous Research on the OpenHermes-2.5 dataset. The base model comes from Mistral AI, and the fine-tuned model is available on Hugging Face.
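Being "chat-based" means the model expects its input wrapped in a particular chat template. The Nous Hermes 2 model card describes this as the ChatML format. Most local runners apply the template for you, but if you ever call the raw model, a minimal sketch of what a ChatML prompt looks like is below. The `to_chatml` helper and the system message wording are my own illustrations, not part of any library.

```python
def to_chatml(messages: list[dict[str, str]]) -> str:
    """Render chat messages as a ChatML string (hypothetical helper)."""
    parts = []
    for message in messages:
        # Each turn is wrapped in <|im_start|>{role} ... <|im_end|> markers.
        parts.append(f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n")
    # Leave an assistant turn open so the model continues from here.
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = to_chatml([
    {"role": "system", "content": "You are an SEO assistant that groups keywords by topic."},
    {"role": "user", "content": "Group these keywords: running shoes, trail running shoes"},
])
print(prompt)
```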

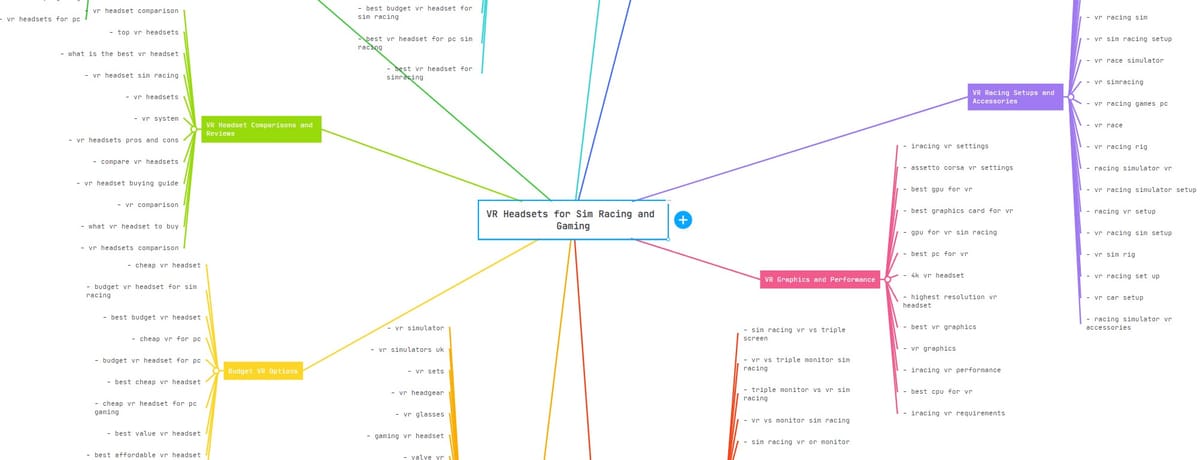

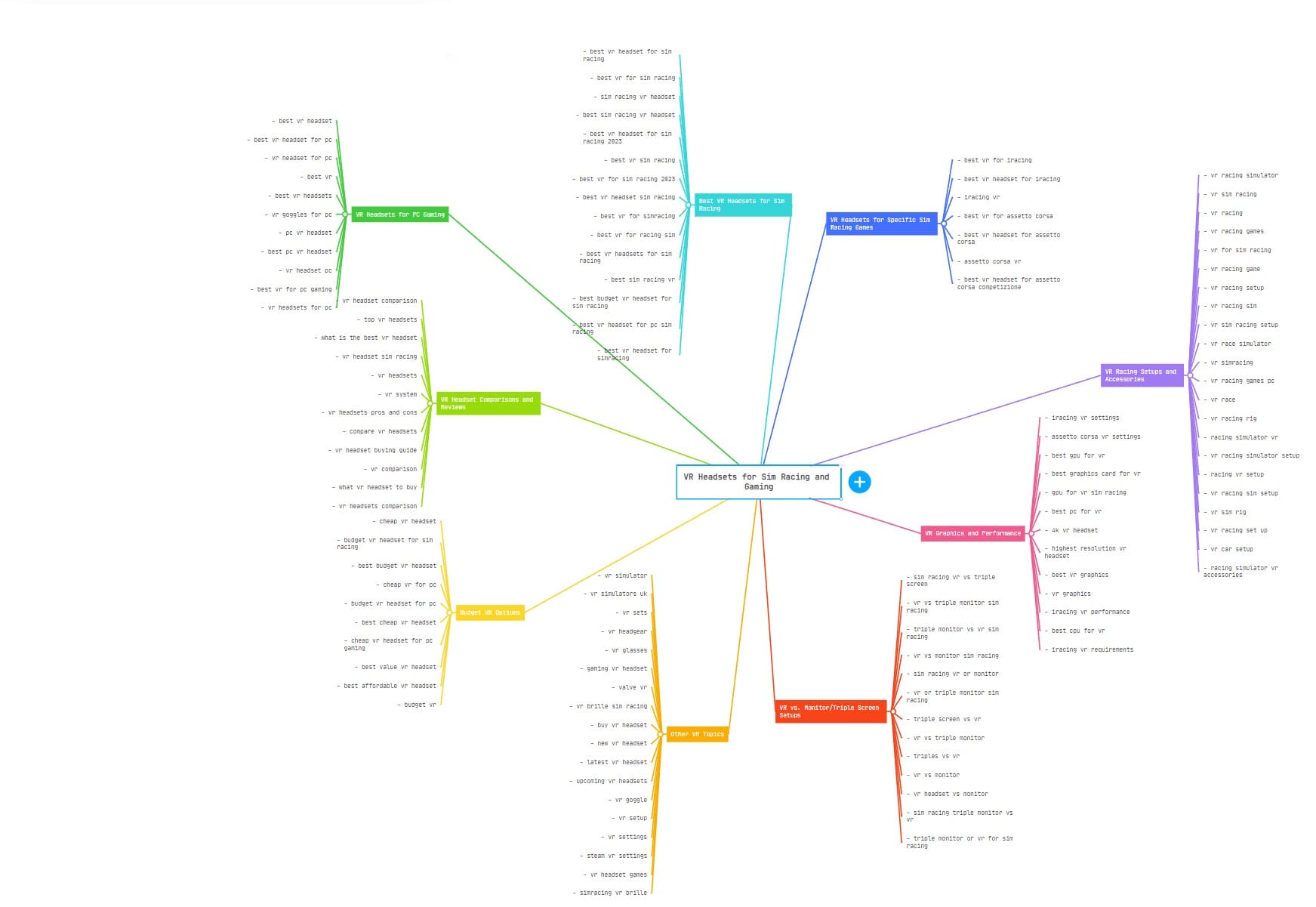

I have 1000 keyphrases, and I'd like to arrange them in a somewhat meaningful way. This output can inform how you might structure a content area on a website, with search engine performance in mind. People who are excited about improving their search engine results call this type of approach "Semantic Keyword Grouping" (or similar).

The method I used to organise the keywords into semantic groups is a combination of (AI-powered) "semantic clustering" and "keyword categorisation". A long time ago, I used Excel to perform arbitrary keyword categorisation with lengthy, power-hungry formulas in tables. Those days are long gone: the RTX 3080 GPU in my work machine is plenty for this type of work.

Semantic clustering involves grouping keywords that are related or share similar meanings, concepts, or themes. Organising keywords in this way helps you identify the main topics and subtopics within the group. By looking at these topics, a content team can easily derive content ideas and groups. It also informs the internal link structure of the content section of a site.
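To make "related or share similar meanings" a little more concrete: a common way to do semantic clustering outside a chat prompt is to turn each keyword into an embedding (a numeric vector that represents its meaning) and group keywords whose vectors point in similar directions. This is not the prompt-based approach I describe here; it's a minimal, dependency-free sketch of greedy threshold clustering by cosine similarity, assuming you already have one embedding per keyword from some embedding model. The 0.8 threshold and the toy three-dimensional vectors are illustrative assumptions.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cluster_keywords(embeddings: dict[str, list[float]], threshold: float = 0.8) -> list[list[str]]:
    """Greedy single pass: each keyword joins the first cluster whose seed
    (first member) is at least `threshold` similar, otherwise it starts a new cluster."""
    clusters: list[list[str]] = []
    seeds: list[list[float]] = []
    for keyword, vector in embeddings.items():
        for cluster, seed in zip(clusters, seeds):
            if cosine(vector, seed) >= threshold:
                cluster.append(keyword)
                break
        else:
            clusters.append([keyword])
            seeds.append(vector)
    return clusters


# Toy "embeddings" for illustration only; real ones come from an embedding model.
toy = {
    "running shoes": [0.9, 0.1, 0.0],
    "trail running shoes": [0.85, 0.15, 0.05],
    "marathon training plan": [0.1, 0.9, 0.1],
    "half marathon schedule": [0.15, 0.85, 0.1],
}
print(cluster_keywords(toy))
# [['running shoes', 'trail running shoes'], ['marathon training plan', 'half marathon schedule']]
```

A greedy single pass is order-dependent, which is fine for a quick first cut that you intend to edit by hand; proper clustering algorithms trade that simplicity for more stable groups.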

In my example I:

- Exported as much keyword data as I could from Google Search Console,

- Gave that list to an AI model with a simple prompt,

- Allowed the AI model to name each semantic group,

- Imported that well-organised structure into MindMeister to make it visual and somewhat interactive.
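The plumbing around those steps can be sketched in a few lines. This is a minimal illustration, not my exact setup, and it makes several assumptions: that the Search Console export is a CSV with a "Top queries" column (check the header row of your own file), that you ask the model to reply with JSON, and that your mind-mapping tool can import a tab-indented text outline (check MindMeister's import options before relying on this). `load_queries`, `build_prompt` and `to_outline` are hypothetical helpers, the prompt wording is illustrative, and the call to the local model itself is left out because it depends on how you've deployed it.

```python
import csv
import io
import json


def load_queries(csv_text: str, column: str = "Top queries") -> list[str]:
    """Read keyword phrases from a Search Console queries export (column name assumed)."""
    keywords = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        value = (row.get(column) or "").strip()
        if value:
            keywords.append(value)
    return keywords


def build_prompt(keywords: list[str]) -> str:
    """Ask the model to name semantic groups and reply as JSON."""
    keyword_list = "\n".join(f"- {k}" for k in keywords)
    return (
        "Group the following keywords into semantic topics. Give each group a short, "
        "descriptive name. Reply with JSON only, mapping group names to lists of keywords.\n\n"
        + keyword_list
    )


def to_outline(reply: str, root: str = "Keywords") -> str:
    """Turn the model's JSON reply into a tab-indented outline."""
    groups = json.loads(reply)
    lines = [root]
    for name, members in groups.items():
        lines.append(f"\t{name}")
        lines.extend(f"\t\t{member}" for member in members)
    return "\n".join(lines)


export = "Top queries,Clicks,Impressions\nrunning shoes,120,3400\ntrail running shoes,45,900\n"
keywords = load_queries(export)
prompt = build_prompt(keywords)
# In practice `reply` comes from the local model; this one is hand-written for illustration.
reply = '{"Running Shoes": ["running shoes", "trail running shoes"]}'
print(to_outline(reply))
```

With 1,000 keywords, you may need to split the list into batches so each prompt fits within the model's context window, and models don't always return valid JSON, so expect to retry or tidy up the occasional reply.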

Keyword categorisation is the process of organising keywords into predefined categories (or "buckets"). The outcome of this process is a set of keywords neatly organised into AI-defined categories. You can, of course, edit these and move things around, but the point is that this is a better approach than simply hammering away at a spreadsheet.
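Once the buckets exist (whether the AI proposed them or you've edited them), new keywords can be dropped into them deterministically. Below is a minimal rule-based sketch, assuming each bucket is described by a few hypothetical trigger words. It's a simple stand-in to show what "predefined categories" means in practice, not how the model itself assigns keywords.

```python
def categorise(keywords: list[str], buckets: dict[str, list[str]]) -> dict[str, list[str]]:
    """Assign each keyword to the first bucket sharing a trigger word with it.
    Keywords that match nothing land in 'Uncategorised' for manual review."""
    result: dict[str, list[str]] = {name: [] for name in buckets}
    result["Uncategorised"] = []
    for keyword in keywords:
        words = set(keyword.lower().split())
        for name, triggers in buckets.items():
            if words & {t.lower() for t in triggers}:
                result[name].append(keyword)
                break
        else:
            result["Uncategorised"].append(keyword)
    return result


buckets = {
    "Footwear": ["shoes", "trainers"],
    "Training": ["plan", "schedule"],
}
keywords = ["trail running shoes", "marathon training plan", "running socks"]
print(categorise(keywords, buckets))
# {'Footwear': ['trail running shoes'], 'Training': ['marathon training plan'], 'Uncategorised': ['running socks']}
```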

By clustering your keywords with a local LLM, you can gain a number of useful efficiencies:

- The process is incredibly quick. What took a day now takes minutes.

- Your data is secure if you're using a local LLM. I haven't seen an incident where keyword research data has influenced an LLM, but there are many concerns in the tech industry about sharing proprietary, confidential data with third-party AI services.

- You get to create a properly structured view of the keyword landscape, gaining rapid insight into the relationships between your keywords and the topics they best fit.

I suppose the coolest part of all of this is that it's very easy to stop, throw the work away and start again.

Back when it took minutes simply to organise one column of keyword data in Excel, there was a sense of process-orientated sunk cost: you've started this marathon, so you must finish!

Not so much anymore.