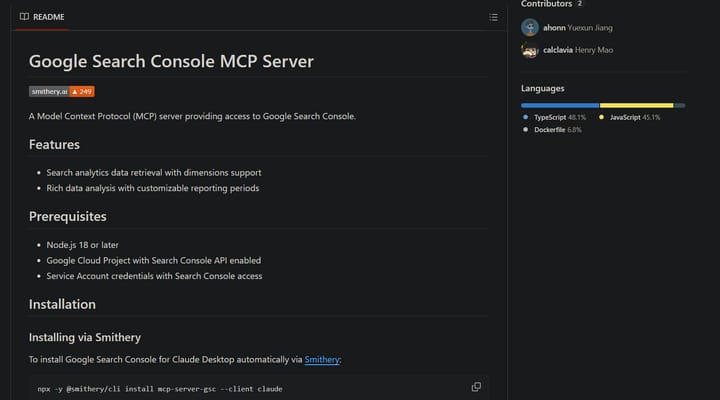

How to Set Up Claude Desktop with Google Search Console MCP

Claude Desktop's ability to access API services through the Model Context Protocol (MCP) is impressive stuff. You can connect Claude to the Search Console API and query it to answer your questions, which I think is worth a deep dive.
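For readers new to MCP, here is some context. Claude Desktop starts each MCP server listed under the `mcpServers` key of its `claude_desktop_config.json` file. It launches each one with the `command`, `args` and optional `env` values given for that entry. Below is a minimal Python sketch that adds such an entry to an existing config. The server name `search-console`, the script path and the use of the `GOOGLE_APPLICATION_CREDENTIALS` variable are hypothetical placeholders. They do not describe any particular Search Console MCP server, which may expect different arguments or credentials.

```python
import json
from typing import Dict, List, Optional


def add_mcp_server(
    config: Dict,
    name: str,
    command: str,
    args: List[str],
    env: Optional[Dict[str, str]] = None,
) -> Dict:
    """Return a copy of a Claude Desktop config with one MCP server entry added or replaced."""
    # Deep copy via a JSON round-trip so the caller's dict is never mutated.
    updated = json.loads(json.dumps(config))
    # Create the "mcpServers" section if the config does not have one yet.
    servers = updated.setdefault("mcpServers", {})
    entry = {"command": command, "args": list(args)}
    if env:
        # Environment variables are passed to the server process Claude Desktop launches.
        entry["env"] = dict(env)
    servers[name] = entry
    return updated


if __name__ == "__main__":
    # Hypothetical values: replace the script and credentials paths with your own server's.
    config = add_mcp_server(
        {"mcpServers": {}},
        name="search-console",
        command="python",
        args=["/path/to/gsc_server.py"],
        env={"GOOGLE_APPLICATION_CREDENTIALS": "/path/to/service-account.json"},
    )
    print(json.dumps(config, indent=2))
```

Returning a new dict rather than editing the file in place makes it easy to inspect the result before writing it back. Claude Desktop generally needs a restart before it picks up config changes.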

While this solution really
